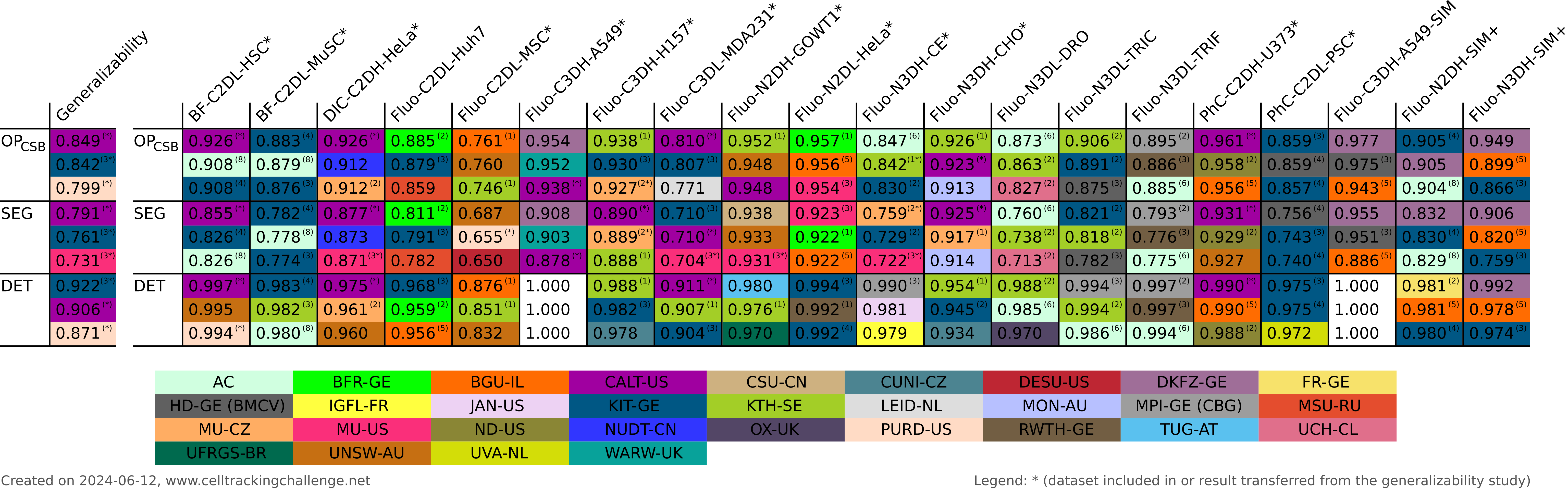

The following table presents the three best results across the 13 included datasets for generalizable submissions and the three best results per dataset for regular submissions, both evaluated using the OPCSB, SEG, and DET measures. A detailed evaluation of the technical performance of all generalizable submissions can be found in this document, and that of all regular submissions is available here. Refer to Participants for detailed descriptions of the individual algorithms and to this document for the mapping between the tags currently used for individual algorithms and their past alternatives.

2024-06-12: New regular submission NUDT-CN included.

2024-04-10: The regular submission DESU-US updated.

2023-12-18: New regular submission AC (8) included.

2023-11-10: The regular submission AC (6) updated.

2023-08-01: The benchmark opened for online generalizable submissions.

2023-04-11: New regular submission UCH-CL (2) included. The regular submission UCH-CL renamed to UCH-CL (1).

2023-02-27: New regular submissions AC (6) and HD-GE (BMCV) (4) included.

2022-11-05: New regular submission UFRGS-BR included.

2022-06-01: The regular submission AC (5) renamed to BGU-IL (5). The invited generalizable submissions transferred.